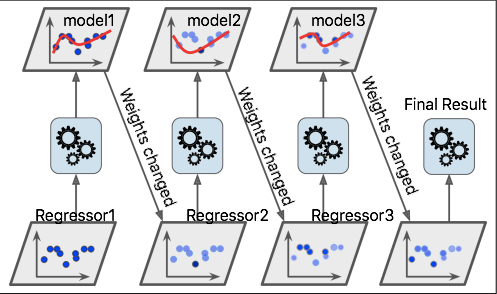

Boosting algorithms are supervised learning algorithms that are often used in machine learning hackathons to improve model accuracy. Before moving on to the different boosting algorithms, let us first discuss what boosting is.

Suppose you build a regression model that reaches an accuracy of 79% on the validation data. You then decide to build two more models on the same data: a decision tree and a K-NN model. These two models give you accuracies of 82% and 66% on the validation set, respectively.

We know that these three models all work in different ways. Regression tries to capture the linear relationship in the data, K-NN classifies each point based on its nearest neighbours, and so on. Instead of using just one model on the dataset, let's combine the models and take the average of the predictions made by all of them. If we do this, we capture more information from the dataset. The basic idea behind this approach is ensemble learning.

So what about boosting? Boosting is one method that uses the idea of ensemble learning. Boosting algorithms combine several simple models to generate the final output. Now for an overview of the various boosting algorithms.

Gradient Boosting Machine (GBM): A GBM combines the predictions of multiple decision trees to produce the final prediction. You may now wonder how these different decision trees capture different information from the data. Let's see how it happens. The nodes in each decision tree consider a different subset of the features when selecting the best split. This means that the trees are not all identical, and so they are able to capture different signals from the data.
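
As a rough illustration of the gradient boosting idea described above, here is a minimal from-scratch sketch in Python. It is not how any particular library implements GBM. It assumes squared-error regression, one-split trees (stumps), a made-up synthetic dataset, and hypothetical helper names (`fit_stump`, `fit_gbm`, `predict_gbm`). Each stump is fit to the residuals of the current ensemble and only sees a random subset of the features, so the trees end up different from one another.

```python
import random


def fit_stump(X, residuals, feature_ids):
    """Return the best one-split stump (feature, threshold, left_mean, right_mean)
    over the given candidate features, or None if no valid split exists."""
    best = None  # (squared_error, feature, threshold, left_mean, right_mean)
    for f in feature_ids:
        for t in sorted({row[f] for row in X}):
            left = [r for row, r in zip(X, residuals) if row[f] <= t]
            right = [r for row, r in zip(X, residuals) if row[f] > t]
            if not left or not right:
                continue  # a split must leave samples on both sides
            lm = sum(left) / len(left)
            rm = sum(right) / len(right)
            err = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
            if best is None or err < best[0]:
                best = (err, f, t, lm, rm)
    return best[1:] if best else None


def predict_stump(stump, row):
    f, t, lm, rm = stump
    return lm if row[f] <= t else rm


def fit_gbm(X, y, n_trees=40, learning_rate=0.1, n_sub_features=2, seed=0):
    """Boosting loop: start from the mean, then repeatedly fit a stump to the
    residuals using a random subset of features (an assumption of this sketch)."""
    rng = random.Random(seed)
    base = sum(y) / len(y)
    preds = [base] * len(y)
    stumps = []
    for _ in range(n_trees):
        residuals = [yi - pi for yi, pi in zip(y, preds)]
        feature_ids = rng.sample(range(len(X[0])), n_sub_features)
        stump = fit_stump(X, residuals, feature_ids)
        if stump is None:
            continue
        stumps.append(stump)
        preds = [p + learning_rate * predict_stump(stump, row)
                 for p, row in zip(preds, X)]
    return base, learning_rate, stumps


def predict_gbm(model, row):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, row) for s in stumps)


def mse(preds, targets):
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(targets)


# Synthetic data (illustrative only): the target depends on features 0 and 1,
# while feature 2 is pure noise.
data_rng = random.Random(42)
X = [[data_rng.uniform(0, 1) for _ in range(3)] for _ in range(120)]
y = [3 * row[0] + 2 * (row[1] > 0.5) + data_rng.gauss(0, 0.1) for row in X]

model = fit_gbm(X, y)
baseline_mse = mse([sum(y) / len(y)] * len(y), y)  # always predict the mean
boosted_mse = mse([predict_gbm(model, row) for row in X], y)
features_used = sorted({stump[0] for stump in model[2]})

print("baseline training MSE:", baseline_mse)
print("boosted training MSE: ", boosted_mse)
print("features used by the stumps:", features_used)
```

The printed errors are training errors on toy data, so they only show that adding trees reduces the residuals; they say nothing about validation accuracy. In practice you would more likely use a library implementation; for example, scikit-learn's `GradientBoostingRegressor` has a `max_features` parameter that limits how many features are considered at each split.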
